Semantic search using AWS CloudFormation and Amazon SageMaker

This tutorial shows you how to implement semantic search in Amazon OpenSearch Service using AWS CloudFormation and Amazon SageMaker. For more information, see Semantic search.

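If you are using self-managed OpenSearch instead of Amazon OpenSearch Service, use the blueprint to create a connector to the Amazon SageMaker model. For more information about creating a connector, see Connectors.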

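The CloudFormation integration automates the steps in the Semantic search using SageMaker embedding models tutorial. The CloudFormation template creates an IAM role and invokes an AWS Lambda function to set up an AI connector and model.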

Replace the placeholders that begin with the prefix your_ with your own values.

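Model input and output requirements

Ensure that the input to your Amazon SageMaker model follows the format required by the default pre-processing function.

The model input must be an array of strings: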

["hello world", "how are you"]

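Additionally, ensure that the model output follows the format required by the default post-processing function. The model output must be an array of arrays, where each inner array is the embedding of the corresponding input string:

    [
      [
        -0.048237994,
        -0.07612697,
        ...
      ],
      [
        0.32621247,
        0.02328475,
        ...
      ]
    ]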
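Before wiring a custom endpoint into the template, it can help to check sample payloads against these two shapes. The following is a minimal Python sketch; the function names are hypothetical, and the requirement that arrays be non-empty is an assumption rather than something the default functions are documented to enforce.

```python
from numbers import Real


def is_valid_model_input(payload):
    """Return True if payload is a non-empty list of strings."""
    return (
        isinstance(payload, list)
        and len(payload) > 0
        and all(isinstance(item, str) for item in payload)
    )


def is_valid_model_output(output, expected_count):
    """Return True if output is a list of numeric lists, one per input string."""
    if not isinstance(output, list) or len(output) != expected_count:
        return False
    for embedding in output:
        # Each inner element must itself be a non-empty list of numbers.
        if not isinstance(embedding, list) or len(embedding) == 0:
            return False
        if not all(isinstance(v, Real) and not isinstance(v, bool) for v in embedding):
            return False
    return True
```

For example, `is_valid_model_output(response, len(texts))` can be run on a decoded endpoint response before you register the model.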

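If your model input or output differs from the required default format, you can build your own pre-processing or post-processing function using a Painless script.

Example: Amazon Bedrock Titan embedding model

For example, the Amazon Bedrock Titan embedding model (blueprint) takes the following input: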

{ "inputText": "your_input_text" }

OpenSearch expects the following input format:

{ "text_docs": [ "your_input_text1", "your_input_text2"] }

To convert text_docs into inputText, you must define the following pre-processing function:

    "pre_process_function": """
        StringBuilder builder = new StringBuilder();
        builder.append("\"");
        String first = params.text_docs[0]; // Get the first doc; ml-commons will iterate over all docs
        builder.append(first);
        builder.append("\"");
        def parameters = "{" + "\"inputText\":" + builder + "}"; // This is the Bedrock Titan embedding model input
        return "{" + "\"parameters\":" + parameters + "}";
    """
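To see what this function produces, the same transformation can be sketched in Python. This is an illustration only and not part of the connector: the function name `pre_process` is hypothetical, and it uses `json.dumps` in place of the script's plain string concatenation so that quotation marks inside a document stay valid JSON.

```python
import json


def pre_process(text_docs):
    """Build the request body for the first document in text_docs."""
    # Only the first document is used; the caller is expected to iterate
    # over the remaining documents, as the comment in the script notes.
    first = text_docs[0]
    # Compact separators reproduce the script's output for simple strings.
    return json.dumps({"parameters": {"inputText": first}}, separators=(",", ":"))
```

For input without special characters, the result matches what the string concatenation in the script yields, for example `{"parameters":{"inputText":"hello world"}}`.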

The default Amazon Bedrock Titan embedding model output has the following format:

    {
      "embedding": <float_array>
    }

However, OpenSearch expects the following format:

    {
      "name": "sentence_embedding",
      "data_type": "FLOAT32",
      "shape": [ <embedding_size> ],
      "data": <float_array>
    }
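The mapping between the two formats is small enough to sketch in Python. This is an illustration only, assuming the model response has already been decoded into a dictionary; the function name `to_opensearch_tensor` is hypothetical, and raising an error on a missing embedding is a choice made for this sketch.

```python
import json


def to_opensearch_tensor(model_response):
    """Convert {"embedding": [...]} into the object format OpenSearch expects."""
    embedding = model_response.get("embedding")
    if not embedding:
        raise ValueError("model response contains no embedding")
    return json.dumps({
        "name": "sentence_embedding",
        "data_type": "FLOAT32",
        "shape": [len(embedding)],  # one dimension: the embedding size
        "data": embedding,
    })
```

The `shape` value is derived from the length of the embedding, so it does not need to be configured separately for each model.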

To convert the Amazon Bedrock Titan embedding model output into the format expected by OpenSearch, you must define the following post-processing function:

    "post_process_function": """
        def name = "sentence_embedding";
        def dataType = "FLOAT32";
        if (params.embedding == null || params.embedding.length == 0) {
            return params.message;
        }
        def shape = [params.embedding.length];
        def json = "{" +
                   "\"name\":\"" + name + "\"," +
                   "\"data_type\":\"" + dataType + "\"," +
                   "\"shape\":" + shape + "," +
                   "\"data\":" + params.embedding +
                   "}";
        return json;
    """

Prerequisite: Create an OpenSearch cluster

Go to the Amazon OpenSearch Service console and create an OpenSearch domain.

Note the domain Amazon Resource Name (ARN); you'll use it in the following steps.

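Step 1: Map a backend role

The OpenSearch CloudFormation template uses a Lambda function to create an AI connector with an AWS Identity and Access Management (IAM) role. You must map the IAM role to ml_full_access to grant the required permissions. Follow Step 2.2 of the Semantic search using SageMaker embedding models tutorial to map a backend role.

The IAM role is specified in the Lambda Invoke OpenSearch ML Commons Role Name field of the CloudFormation template. The default IAM role is LambdaInvokeOpenSearchMLCommonsRole, so you must map the arn:aws:iam::your_aws_account_id:role/LambdaInvokeOpenSearchMLCommonsRole backend role to ml_full_access.

For a broader mapping, you can grant ml_full_access to all roles in the account by using a wildcard: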

arn:aws:iam::your_aws_account_id:role/*

Because all_access includes more permissions than ml_full_access, mapping the backend role to all_access is also acceptable.
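As a rough illustration of the mapping, the following Python sketch builds the backend role ARN and a request for the Security plugin role-mapping REST API. It assumes that API is reachable on your domain and that you prefer it over the console steps in the referenced tutorial; the helper names are hypothetical, and the request is only constructed, not sent.

```python
import json

DEFAULT_ROLE_NAME = "LambdaInvokeOpenSearchMLCommonsRole"


def backend_role_arn(account_id, role_name=DEFAULT_ROLE_NAME):
    """Build the IAM role ARN that is mapped as a backend role."""
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def role_mapping_request(account_id, security_role="ml_full_access",
                         role_name=DEFAULT_ROLE_NAME):
    """Return (method, path, body) for a role-mapping call; nothing is sent."""
    path = f"_plugins/_security/api/rolesmapping/{security_role}"
    body = {"backend_roles": [backend_role_arn(account_id, role_name)]}
    return "PUT", path, json.dumps(body)
```

Passing `role_name="*"` yields the wildcard ARN shown above. A PUT to this endpoint is expected to replace the existing mapping for that role, so include any backend roles that are already mapped.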

步骤 2:运行 CloudFormation 模板

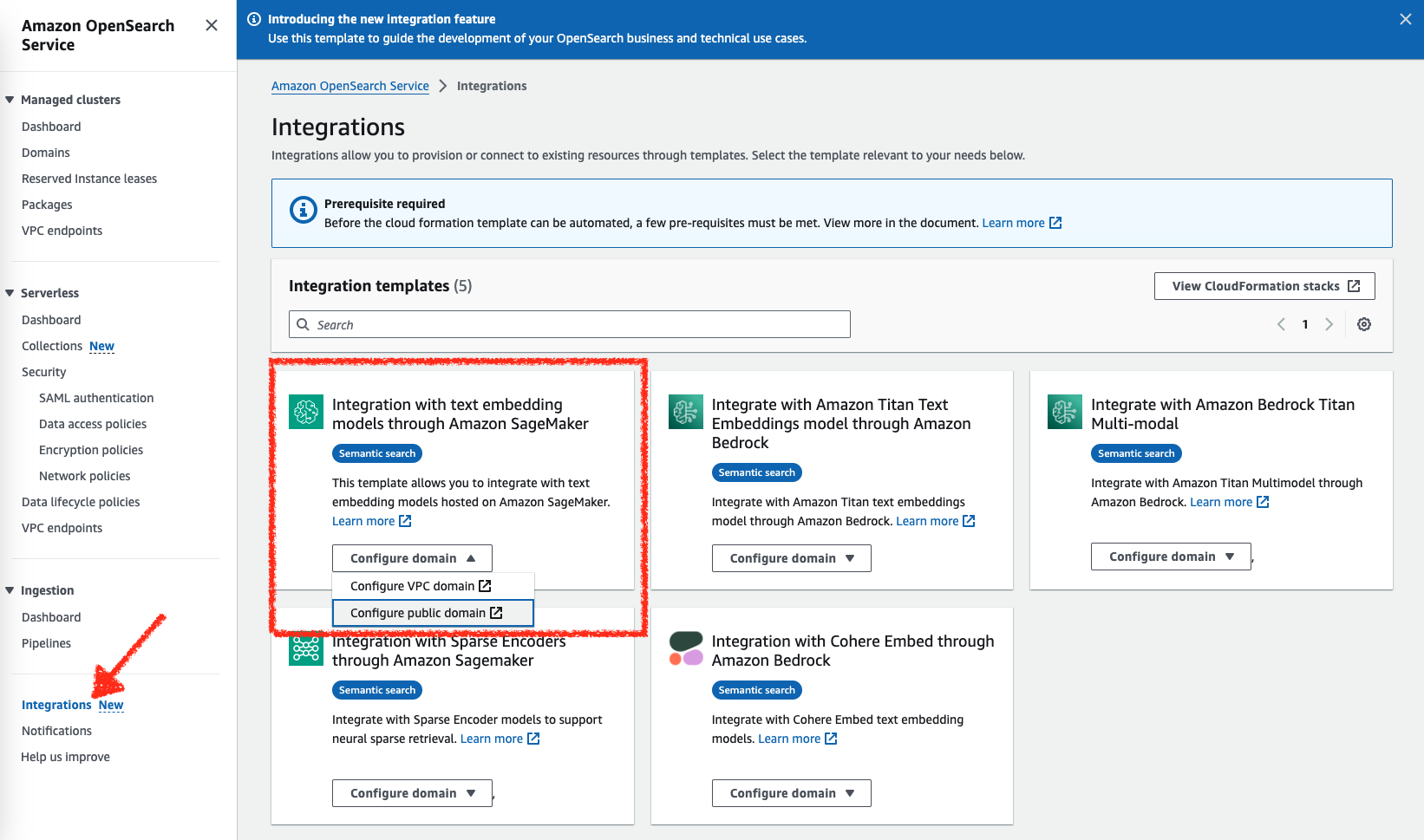

CloudFormation 模板集成可在 Amazon OpenSearch Service 控制台中找到。从左侧导航窗格中,选择集成,如下图所示。

选择以下选项之一将模型部署到 Amazon SageMaker。

选项 1:将预训练模型部署到 Amazon SageMaker

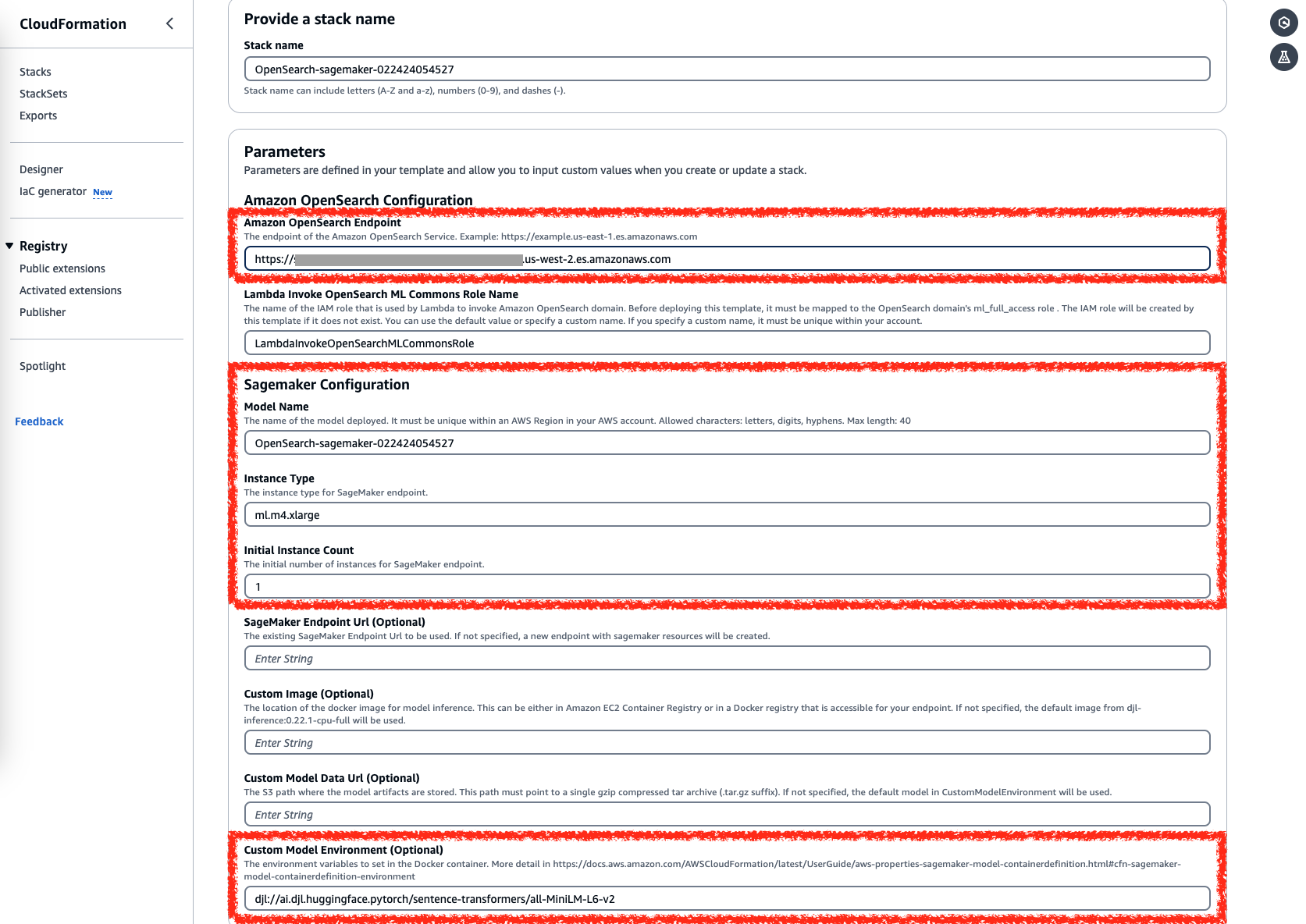

您可以从 Deep Java Library 模型存储库部署预训练的 Hugging Face 句子转换器嵌入模型,如下图所示。

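Complete the following fields, keeping all other fields at their default values:

- Enter your Amazon OpenSearch Endpoint.

- Use the default SageMaker Configuration to get started quickly, or modify it as needed. For supported Amazon SageMaker instance types, see the Amazon SageMaker documentation.

- Leave the SageMaker Endpoint Url field empty. If you provide a URL, the model will not be deployed to Amazon SageMaker, and a new inference endpoint will not be created.

- Leave the Custom Image field empty. The default image is djl-inference:0.22.1-cpu-full. For available images, see AWS Deep Learning Containers.

- Leave the Custom Model Data Url field empty.

- The Custom Model Environment field defaults to djl://ai.djl.huggingface.pytorch/sentence-transformers/all-MiniLM-L6-v2. For a list of supported models, see Supported models.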

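Option 2: Use an existing SageMaker inference endpoint

If you already have a SageMaker inference endpoint, you can configure a model using that endpoint, as shown in the following image.

Complete the following fields, keeping all other fields at their default values:

- Enter your Amazon OpenSearch Endpoint.

- Enter your SageMaker Endpoint Url.

- Leave the Custom Image, Custom Model Data Url, and Custom Model Environment fields empty.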
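The difference between the two options comes down to whether the SageMaker Endpoint Url field is filled in. The following Python sketch captures that rule as a pre-flight check. It is illustrative only: the dictionary keys are the console field labels used above, not the template's actual parameter keys, and the function name is hypothetical.

```python
CUSTOM_FIELDS = ("Custom Image", "Custom Model Data Url", "Custom Model Environment")


def deployment_option(fields):
    """Classify planned field values as "option 1" or "option 2".

    `fields` maps console field labels to the values you intend to enter.
    """
    if not fields.get("Amazon OpenSearch Endpoint"):
        raise ValueError("Amazon OpenSearch Endpoint is required for both options")

    if fields.get("SageMaker Endpoint Url"):
        # An existing endpoint is reused, so the custom fields must stay empty.
        filled = [name for name in CUSTOM_FIELDS if fields.get(name)]
        if filled:
            raise ValueError(f"Leave these fields empty for Option 2: {filled}")
        return "option 2"

    # No endpoint URL: the template deploys a pretrained model.
    return "option 1"
```

Running this on the values you plan to enter makes it harder to reuse an existing endpoint by accident when you meant to deploy a new one.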

Output

After deployment, you can find the OpenSearch AI connector and model IDs in the CloudFormation stack Outputs.
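If you prefer to read the stack outputs programmatically rather than in the console, a minimal Python sketch using boto3 might look like the following. It assumes boto3 is installed and AWS credentials are configured; the function names are hypothetical, and the output key names depend on the template, so they are not hard-coded here.

```python
def outputs_to_dict(stack):
    """Flatten one stack description's Outputs list into {OutputKey: OutputValue}."""
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}


def fetch_stack_outputs(stack_name):
    """Read the outputs of a deployed stack (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so outputs_to_dict works without boto3

    cloudformation = boto3.client("cloudformation")
    response = cloudformation.describe_stacks(StackName=stack_name)
    return outputs_to_dict(response["Stacks"][0])
```

Calling `fetch_stack_outputs("your_stack_name")` returns a dictionary in which you can look up the connector and model IDs by the keys the template defines.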

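If an error occurs, follow these steps to review the logs:

- Open the Amazon SageMaker console.

- Navigate to the CloudWatch Logs section.

- Search for log groups that contain (or are associated with) your CloudFormation stack name.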
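The log group search in the steps above can also be approximated from a script. The following Python sketch lists log group names with boto3 and filters them by stack name on the client side. It assumes boto3 and AWS credentials are available, and the case-insensitive substring match is a simplification that will miss log groups that are associated with the stack but do not carry its name.

```python
def matching_log_groups(log_group_names, stack_name):
    """Return the log group names that contain the stack name (case-insensitive)."""
    needle = stack_name.lower()
    return [name for name in log_group_names if needle in name.lower()]


def list_log_group_names():
    """List all CloudWatch Logs group names (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so matching_log_groups works without boto3

    logs = boto3.client("logs")
    paginator = logs.get_paginator("describe_log_groups")
    names = []
    for page in paginator.paginate():
        names.extend(group["logGroupName"] for group in page["logGroups"])
    return names
```

For example, `matching_log_groups(list_log_group_names(), "your_stack_name")` narrows the list to candidates worth opening in the console.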

Supported models

The following Hugging Face sentence transformer embedding models are available in the Deep Java Library model repository:

djl://ai.djl.huggingface.pytorch/sentence-transformers/LaBSE/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-MiniLM-L12-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-MiniLM-L12-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-MiniLM-L6-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-MiniLM-L6-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-distilroberta-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-mpnet-base-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-mpnet-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/all-roberta-large-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/allenai-specter/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-base-nli-cls-token/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-base-nli-max-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-base-nli-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-base-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-base-wikipedia-sections-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-large-nli-cls-token/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-large-nli-max-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-large-nli-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/bert-large-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/clip-ViT-B-32-multilingual-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/distilbert-base-nli-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/distilbert-base-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/distilbert-base-nli-stsb-quora-ranking/

djl://ai.djl.huggingface.pytorch/sentence-transformers/distilbert-multilingual-nli-stsb-quora-ranking/

djl://ai.djl.huggingface.pytorch/sentence-transformers/distiluse-base-multilingual-cased-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/facebook-dpr-ctx_encoder-multiset-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/facebook-dpr-ctx_encoder-single-nq-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/facebook-dpr-question_encoder-multiset-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/facebook-dpr-question_encoder-single-nq-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-MiniLM-L-12-v3/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-MiniLM-L-6-v3/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-MiniLM-L12-cos-v5/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-MiniLM-L6-cos-v5/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-bert-base-dot-v5/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-bert-co-condensor/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-base-dot-prod-v3/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-base-tas-b/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-base-v3/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-base-v4/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-cos-v5/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-dot-v5/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-multilingual-en-de-v2-tmp-lng-aligned/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilbert-multilingual-en-de-v2-tmp-trained-scratch/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-distilroberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-roberta-base-ance-firstp/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-roberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/msmarco-roberta-base-v3/

djl://ai.djl.huggingface.pytorch/sentence-transformers/multi-qa-MiniLM-L6-cos-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/multi-qa-MiniLM-L6-dot-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/multi-qa-distilbert-cos-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/multi-qa-distilbert-dot-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nli-bert-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nli-bert-large-max-pooling/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nli-distilbert-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nli-distilroberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nli-roberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nli-roberta-large/

djl://ai.djl.huggingface.pytorch/sentence-transformers/nq-distilbert-base-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-MiniLM-L12-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-MiniLM-L3-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-MiniLM-L6-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-TinyBERT-L6-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-albert-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-albert-small-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-distilroberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-multilingual-mpnet-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/paraphrase-xlm-r-multilingual-v1/

djl://ai.djl.huggingface.pytorch/sentence-transformers/quora-distilbert-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/quora-distilbert-multilingual/

djl://ai.djl.huggingface.pytorch/sentence-transformers/roberta-base-nli-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/roberta-base-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/roberta-large-nli-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/roberta-large-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-bert-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-bert-large/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-distilbert-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-distilroberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-roberta-base-v2/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-roberta-base/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-roberta-large/

djl://ai.djl.huggingface.pytorch/sentence-transformers/stsb-xlm-r-multilingual/

djl://ai.djl.huggingface.pytorch/sentence-transformers/use-cmlm-multilingual/

djl://ai.djl.huggingface.pytorch/sentence-transformers/xlm-r-100langs-bert-base-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/xlm-r-bert-base-nli-stsb-mean-tokens/

djl://ai.djl.huggingface.pytorch/sentence-transformers/xlm-r-distilroberta-base-paraphrase-v1/