Workflow tutorial

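You can automate the setup of common use cases, such as conversational chat, using a Chain-of-Thought (CoT) agent. An agent orchestrates and runs machine learning (ML) models and tools. A tool performs a set of specific tasks. This page presents a complete example of setting up a CoT agent. For more information about agents and tools, see Agents and tools.

The setup requires the following sequence of API requests, in which resources provisioned by earlier requests are used in later requests. The following list outlines the steps required for this workflow. The step names correspond to the names in the template: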

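- Deploy a model on the cluster
  - create_connector_1: Create a connector to an externally hosted model.
  - register_model_2: Register a model using the connector that you created.
  - deploy_model_3: Deploy the model.
- Use the deployed model for inference
  - Set up several tools that perform specific tasks:
    - list_index_tool: Set up a tool to get index information.
    - ml_model_tool: Set up an ML model tool.
  - Set up one or more agents that use some combination of the tools:
    - sub_agent: Create an agent that uses the list_index_tool.
  - Set up tools representing these agents:
    - agent_tool: Wrap the sub_agent so that you can use it as a tool.
  - root_agent: Set up a root agent that can delegate a task to either a tool or another agent.

The following sections describe these steps in detail. For the complete workflow template, see Complete YAML workflow template.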

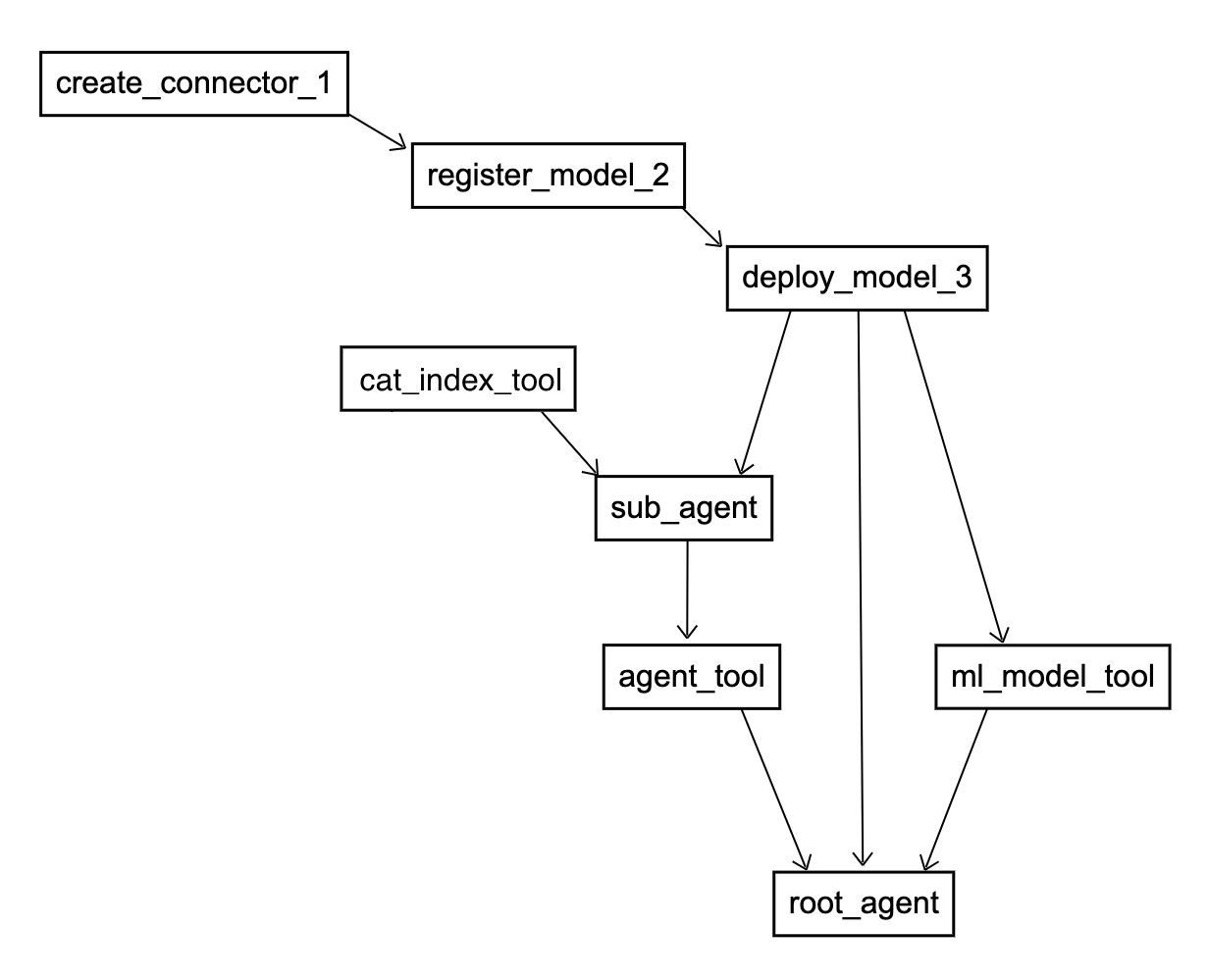

工作流图

上一节中描述的工作流被组织成一个模板。请注意,您可以以多种方式对步骤进行排序。在示例模板中,ml_model_tool 步骤在 root_agent 步骤之前指定,但您可以在 deploy_model_3 步骤之后和 root_agent 步骤之前的任何点指定它。下图显示了 OpenSearch 为模板中指定顺序的所有步骤创建的有向无环图 (DAG)。

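1. Deploy a model on the cluster

To deploy a model on the cluster, you need to create a connector to the model, register the model, and then deploy the model.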

create_connector_1

The first step in the workflow is to create a connector to an externally hosted model (in the following example, this step is called create_connector_1). The content of the user_inputs field exactly matches the ML Commons Create Connector API:

    nodes:
    - id: create_connector_1
      type: create_connector
      user_inputs:
        name: OpenAI Chat Connector
        description: The connector to public OpenAI model service for GPT 3.5
        version: '1'
        protocol: http
        parameters:
          endpoint: api.openai.com
          model: gpt-3.5-turbo
        credential:
          openAI_key: '12345'
        actions:
        - action_type: predict
          method: POST
          url: https://${parameters.endpoint}/v1/chat/completions

When you create a connector, OpenSearch returns a connector_id, which you need in order to register the model.
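To see how the ${parameters.endpoint} placeholder in the action url relates to the parameters block, the following is a minimal Python sketch of that substitution. The resolve_placeholders helper is hypothetical and written only for illustration; ML Commons performs the actual substitution when the connector is invoked, and its implementation may differ.

```python
import re


def resolve_placeholders(template: str, parameters: dict) -> str:
    """Replace each ${parameters.<name>} with the matching parameter value.

    Hypothetical helper: it only illustrates the idea of the placeholder.
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in parameters:
            raise KeyError(f"no connector parameter named {name!r}")
        return str(parameters[name])

    return re.sub(r"\$\{parameters\.([A-Za-z0-9_]+)\}", substitute, template)


# Values copied from the create_connector_1 step.
parameters = {"endpoint": "api.openai.com", "model": "gpt-3.5-turbo"}
url = resolve_placeholders(
    "https://${parameters.endpoint}/v1/chat/completions", parameters
)
print(url)
```

With the values from the example connector, the placeholder resolves to the chat completions endpoint on api.openai.com.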

register_model_2

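When registering a model, the previous_node_inputs field tells OpenSearch to obtain the required connector_id from the output of the create_connector_1 step. Other inputs required by the Register Model API are included in the user_inputs field:

    - id: register_model_2
      type: register_remote_model
      previous_node_inputs:
        create_connector_1: connector_id
      user_inputs:
        name: openAI-gpt-3.5-turbo
        function_name: remote
        description: test model

The output of this step is a model_id. You must then deploy the registered model to the cluster.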

deploy_model_3

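The Deploy Model API requires the model_id from the previous step, as specified in the previous_node_inputs field:

    - id: deploy_model_3
      type: deploy_model
      # This step needs the model_id produced as an output of the previous step
      previous_node_inputs:
        register_model_2: model_id

When you use the Deploy Model API directly, it returns a task ID, and you must use the Tasks API to determine when the deployment is complete. The automated workflow eliminates this manual status check and returns the final model_id directly.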
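For comparison, the manual status check that the workflow replaces could be sketched as the polling loop below. This is an illustration under stated assumptions: wait_for_model_id and get_task are hypothetical names, get_task stands in for a call to the Tasks API, and the state and model_id response fields are assumed rather than taken from this page.

```python
import time
from typing import Callable


def wait_for_model_id(
    get_task: Callable[[str], dict],
    task_id: str,
    interval_s: float = 1.0,
    max_polls: int = 30,
) -> str:
    """Poll a task until it completes and return the model_id it reports.

    get_task is a hypothetical callable standing in for a Tasks API request.
    The "state" and "model_id" keys are assumptions about the response body.
    """
    for _ in range(max_polls):
        task = get_task(task_id)
        state = task.get("state")
        if state == "COMPLETED":
            return task["model_id"]
        if state == "FAILED":
            raise RuntimeError(f"deployment task {task_id} failed: {task}")
        time.sleep(interval_s)
    raise TimeoutError(f"task {task_id} did not complete after {max_polls} polls")


# Simulated task responses so that the sketch runs without a cluster.
responses = iter([
    {"state": "CREATED"},
    {"state": "RUNNING"},
    {"state": "COMPLETED", "model_id": "example-model-id"},
])
model_id = wait_for_model_id(
    lambda _task_id: next(responses), "example-task-id", interval_s=0
)
print(model_id)
```

Passing the task lookup in as a callable keeps the loop independent of any particular HTTP client, which is why the sketch can run against simulated responses.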

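Step ordering

To order these steps in a sequence, you must connect them by edges in the graph. When a previous_node_inputs field is present in a step, OpenSearch automatically creates an edge with source and dest fields for that step. The output of the source is a required input for the dest. For example, the register_model_2 step requires the connector_id from the create_connector_1 step. Similarly, the deploy_model_3 step requires the model_id from the register_model_2 step. Thus, OpenSearch creates the first two edges in the graph as follows in order to match each output with the required input, and raises an error if a required input is missing:

    edges:
    - source: create_connector_1
      dest: register_model_2
    - source: register_model_2
      dest: deploy_model_3

If you define previous_node_inputs, then defining edges is optional.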
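The relationship between previous_node_inputs and edges can be sketched in a few lines of Python. The derive_edges and execution_order helpers below are hypothetical and only mimic the behavior described in this section; they are not the Flow Framework implementation.

```python
from graphlib import TopologicalSorter

# The first three nodes of the workflow, reduced to the fields that matter here.
nodes = [
    {"id": "create_connector_1"},
    {"id": "register_model_2",
     "previous_node_inputs": {"create_connector_1": "connector_id"}},
    {"id": "deploy_model_3",
     "previous_node_inputs": {"register_model_2": "model_id"}},
]


def derive_edges(nodes: list) -> list:
    """Create one edge per previous_node_inputs key (hypothetical helper)."""
    edges = []
    for node in nodes:
        for source in node.get("previous_node_inputs", {}):
            edges.append({"source": source, "dest": node["id"]})
    return edges


def execution_order(nodes: list, edges: list) -> list:
    """Return node IDs in an order that respects every edge."""
    sorter = TopologicalSorter({node["id"]: set() for node in nodes})
    for edge in edges:
        # The dest step depends on the output of the source step.
        sorter.add(edge["dest"], edge["source"])
    return list(sorter.static_order())


edges = derive_edges(nodes)
order = execution_order(nodes, edges)
print(edges)
print(order)
```

Because these three steps form a single chain, only one execution order satisfies the edges: the connector is created first, then the model is registered, then deployed.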

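2. Use the deployed model for inference

A CoT agent can use the deployed model in a tool. This step doesn't strictly correspond to an API but represents a component of the request body required by the Register Agent API. This simplifies the register request and allows the same tool to be reused in multiple agents. For more information about agents and tools, see Agents and tools.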

list_index_tool

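You can configure other tools for the CoT agent to use. For example, you can configure a list_index_tool as follows. This tool does not depend on any previous steps:

    - id: list_index_tool
      type: create_tool
      user_inputs:
        name: ListIndexTool
        type: ListIndexTool
        parameters:
          max_iteration: 5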

sub_agent

To use the list_index_tool in the agent configuration, specify it as one of the tools in the agent's previous_node_inputs field. You can add other tools to previous_node_inputs as necessary. The agent also needs a large language model (LLM) in order to reason with the tools. The LLM is defined by the llm.model_id field. This example assumes that the model_id from the deploy_model_3 step will be used. However, if another model is already deployed, you can include the model_id of that previously deployed model in the user_inputs field instead:

    - id: sub_agent
      type: register_agent
      previous_node_inputs:
        # When llm.model_id is not present this can be used as a fallback value
        deploy_model_3: model_id
        list_index_tool: tools
      user_inputs:
        name: Sub Agent
        type: conversational
        description: this is a test agent
        parameters:
          hello: world
        llm.parameters:
          max_iteration: '5'
          stop_when_no_tool_found: 'true'
        memory:
          type: conversation_index
        app_type: chatbot

OpenSearch automatically creates the following edges so that the agent can retrieve the fields from the previous nodes:

    - source: list_index_tool
      dest: sub_agent
    - source: deploy_model_3
      dest: sub_agent
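The choice between an llm.model_id supplied in user_inputs and a model_id inherited from a previous node could be pictured as follows. This is only a sketch of the fallback described in the template comment: resolve_llm_model_id is a hypothetical helper, the model ID strings are made up, and the actual resolution logic in OpenSearch may differ.

```python
def resolve_llm_model_id(user_inputs: dict, previous_outputs: dict) -> str:
    """Pick the LLM for an agent step (hypothetical helper).

    An llm.model_id given explicitly in user_inputs wins; otherwise the
    model_id produced by a previous node is used as the fallback value.
    """
    explicit = user_inputs.get("llm.model_id")
    if explicit:
        return explicit
    for outputs in previous_outputs.values():
        if "model_id" in outputs:
            return outputs["model_id"]
    raise ValueError("no llm.model_id in user_inputs and no model_id from previous nodes")


# Outputs of earlier steps, keyed by node ID (values are made up).
previous_outputs = {"deploy_model_3": {"model_id": "model-from-workflow"}}

inherited = resolve_llm_model_id({"name": "Sub Agent"}, previous_outputs)
explicit = resolve_llm_model_id(
    {"name": "Sub Agent", "llm.model_id": "model-already-deployed"},
    previous_outputs,
)
print(inherited)
print(explicit)
```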

agent_tool

You can use an agent as a tool for another agent. Registering an agent produces an agent_id in the output. The following step defines a tool that uses the agent_id from the previous step:

    - id: agent_tool
      type: create_tool
      previous_node_inputs:
        sub_agent: agent_id
      user_inputs:
        name: AgentTool
        type: AgentTool
        description: Agent Tool
        parameters:
          max_iteration: 5

OpenSearch automatically creates an edge connection because this step specifies previous_node_inputs:

    - source: sub_agent
      dest: agent_tool

ml_model_tool

A tool can reference an ML model. This example gets the required model_id from the model deployed in a previous step:

    - id: ml_model_tool
      type: create_tool
      previous_node_inputs:
        deploy_model_3: model_id
      user_inputs:
        name: MLModelTool
        type: MLModelTool
        alias: language_model_tool
        description: A general tool to answer any question.
        parameters:
          prompt: Answer the question as best you can.
          response_filter: choices[0].message.content

OpenSearch automatically creates an edge in order to use the previous_node_inputs:

    - source: deploy_model_3
      dest: ml_model_tool

root_agent

A conversational chat application communicates with a single root agent that includes the ML model tool and the agent tool in its tools field. The root agent also obtains the llm.model_id from the deployed model. Some agents require tools to be in a specific order, which you can enforce by including the tools_order field in the user inputs:

    - id: root_agent
      type: register_agent
      previous_node_inputs:
        deploy_model_3: model_id
        ml_model_tool: tools
        agent_tool: tools
      user_inputs:
        name: DEMO-Test_Agent_For_CoT
        type: conversational
        description: this is a test agent
        parameters:
          prompt: Answer the question as best you can.
        llm.parameters:
          max_iteration: '5'
          stop_when_no_tool_found: 'true'
        tools_order: ['agent_tool', 'ml_model_tool']
        memory:
          type: conversation_index
        app_type: chatbot

OpenSearch automatically creates edges for the previous_node_inputs sources:

    - source: deploy_model_3
      dest: root_agent
    - source: ml_model_tool
      dest: root_agent
    - source: agent_tool
      dest: root_agent

For the complete DAG that OpenSearch creates for this workflow, see the workflow graph.
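Because every previous_node_inputs key and every edge endpoint must name a node defined in the template, a small check can catch mismatched IDs before a template is submitted. The following Python sketch assumes a hypothetical undefined_references helper and a deliberately misspelled node ID (a hyphen instead of an underscore) to show what such a check might report.

```python
def undefined_references(workflow: dict) -> list[str]:
    """List references to node IDs that the workflow does not define.

    Hypothetical helper: it checks previous_node_inputs keys and edge
    endpoints against the set of declared node IDs.
    """
    nodes = workflow.get("nodes", [])
    defined = {node["id"] for node in nodes}
    problems = []
    for node in nodes:
        for source in node.get("previous_node_inputs", {}):
            if source not in defined:
                problems.append(f"{node['id']}.previous_node_inputs -> {source}")
    for edge in workflow.get("edges", []):
        for end in ("source", "dest"):
            if edge[end] not in defined:
                problems.append(f"edge {end} -> {edge[end]}")
    return problems


# A reduced workflow with an intentional typo: deploy-model-3 is not a node ID.
workflow = {
    "nodes": [
        {"id": "deploy_model_3"},
        {"id": "ml_model_tool",
         "previous_node_inputs": {"deploy-model-3": "model_id"}},
    ],
    "edges": [{"source": "deploy-model-3", "dest": "ml_model_tool"}],
}
problems = undefined_references(workflow)
print(problems)
```

Running the same check on a template whose references all match declared node IDs returns an empty list.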

Complete YAML workflow template

The following is the final template, in YAML format, including all of the provision workflow steps:


    # This template demonstrates provisioning the resources for a
    # Chain-of-Thought chat bot
    name: tool-register-agent
    description: test case
    use_case: REGISTER_AGENT
    version:
      template: 1.0.0
      compatibility:
      - 2.12.0
      - 3.0.0
    workflows:
      # This workflow defines the actions to be taken when the Provision Workflow API is used
      provision:
        nodes:
        # The first three nodes create a connector to a remote model, register the model, and deploy it
        - id: create_connector_1
          type: create_connector
          user_inputs:
            name: OpenAI Chat Connector
            description: The connector to public OpenAI model service for GPT 3.5
            version: '1'
            protocol: http
            parameters:
              endpoint: api.openai.com
              model: gpt-3.5-turbo
            credential:
              openAI_key: '12345'
            actions:
            - action_type: predict
              method: POST
              url: https://${parameters.endpoint}/v1/chat/completions
        - id: register_model_2
          type: register_remote_model
          previous_node_inputs:
            create_connector_1: connector_id
          user_inputs:
            # deploy: true could be added here instead of the deploy step below
            name: openAI-gpt-3.5-turbo
            description: test model
        - id: deploy_model_3
          type: deploy_model
          previous_node_inputs:
            register_model_2: model_id
        # For example purposes, the model_id obtained as the output of the deploy_model_3 step will be used
        # for several of the steps below. However, any other deployed model_id can be used for those steps.
        # This is one example tool from the Agent Framework.
        - id: list_index_tool
          type: create_tool
          user_inputs:
            name: ListIndexTool
            type: ListIndexTool
            parameters:
              max_iteration: 5
        # This simple agent only has one tool, but could be configured with many tools
        - id: sub_agent
          type: register_agent
          previous_node_inputs:
            deploy_model_3: model_id
            list_index_tool: tools
          user_inputs:
            name: Sub Agent
            type: conversational
            parameters:
              hello: world
            llm.parameters:
              max_iteration: '5'
              stop_when_no_tool_found: 'true'
            memory:
              type: conversation_index
            app_type: chatbot
        # An agent can itself be used as a tool in a nested relationship
        - id: agent_tool
          type: create_tool
          previous_node_inputs:
            sub_agent: agent_id
          user_inputs:
            name: AgentTool
            type: AgentTool
            parameters:
              max_iteration: 5
        # An ML model can be used as a tool
        - id: ml_model_tool
          type: create_tool
          previous_node_inputs:
            deploy_model_3: model_id
          user_inputs:
            name: MLModelTool
            type: MLModelTool
            alias: language_model_tool
            parameters:
              prompt: Answer the question as best you can.
              response_filter: choices[0].message.content
        # This final agent will be the interface for the CoT chat user
        # When using a flow agent type, tools_order matters
        - id: root_agent
          type: register_agent
          previous_node_inputs:
            deploy_model_3: model_id
            ml_model_tool: tools
            agent_tool: tools
          user_inputs:
            name: DEMO-Test_Agent
            type: flow
            parameters:
              prompt: Answer the question as best you can.
            llm.parameters:
              max_iteration: '5'
              stop_when_no_tool_found: 'true'
            tools_order: ['agent_tool', 'ml_model_tool']
            memory:
              type: conversation_index
            app_type: chatbot
        # These edges are all automatically created from previous_node_inputs
        edges:
        - source: create_connector_1
          dest: register_model_2
        - source: register_model_2
          dest: deploy_model_3
        - source: list_index_tool
          dest: sub_agent
        - source: deploy_model_3
          dest: sub_agent
        - source: sub_agent
          dest: agent_tool
        - source: deploy_model_3
          dest: ml_model_tool
        - source: deploy_model_3
          dest: root_agent
        - source: ml_model_tool
          dest: root_agent
        - source: agent_tool
          dest: root_agent

Complete JSON workflow template

The following is the same template in JSON format:


    {
      "name": "tool-register-agent",
      "description": "test case",
      "use_case": "REGISTER_AGENT",
      "version": {
        "template": "1.0.0",
        "compatibility": [
          "2.12.0",
          "3.0.0"
        ]
      },
      "workflows": {
        "provision": {
          "nodes": [
            {
              "id": "create_connector_1",
              "type": "create_connector",
              "user_inputs": {
                "name": "OpenAI Chat Connector",
                "description": "The connector to public OpenAI model service for GPT 3.5",
                "version": "1",
                "protocol": "http",
                "parameters": {
                  "endpoint": "api.openai.com",
                  "model": "gpt-3.5-turbo"
                },
                "credential": {
                  "openAI_key": "12345"
                },
                "actions": [
                  {
                    "action_type": "predict",
                    "method": "POST",
                    "url": "https://${parameters.endpoint}/v1/chat/completions"
                  }
                ]
              }
            },
            {
              "id": "register_model_2",
              "type": "register_remote_model",
              "previous_node_inputs": {
                "create_connector_1": "connector_id"
              },
              "user_inputs": {
                "name": "openAI-gpt-3.5-turbo",
                "description": "test model"
              }
            },
            {
              "id": "deploy_model_3",
              "type": "deploy_model",
              "previous_node_inputs": {
                "register_model_2": "model_id"
              }
            },
            {
              "id": "list_index_tool",
              "type": "create_tool",
              "user_inputs": {
                "name": "ListIndexTool",
                "type": "ListIndexTool",
                "parameters": {
                  "max_iteration": 5
                }
              }
            },
            {
              "id": "sub_agent",
              "type": "register_agent",
              "previous_node_inputs": {
                "deploy_model_3": "llm.model_id",
                "list_index_tool": "tools"
              },
              "user_inputs": {
                "name": "Sub Agent",
                "type": "conversational",
                "parameters": {
                  "hello": "world"
                },
                "llm.parameters": {
                  "max_iteration": "5",
                  "stop_when_no_tool_found": "true"
                },
                "memory": {
                  "type": "conversation_index"
                },
                "app_type": "chatbot"
              }
            },
            {
              "id": "agent_tool",
              "type": "create_tool",
              "previous_node_inputs": {
                "sub_agent": "agent_id"
              },
              "user_inputs": {
                "name": "AgentTool",
                "type": "AgentTool",
                "parameters": {
                  "max_iteration": 5
                }
              }
            },
            {
              "id": "ml_model_tool",
              "type": "create_tool",
              "previous_node_inputs": {
                "deploy_model_3": "model_id"
              },
              "user_inputs": {
                "name": "MLModelTool",
                "type": "MLModelTool",
                "alias": "language_model_tool",
                "parameters": {
                  "prompt": "Answer the question as best you can.",
                  "response_filter": "choices[0].message.content"
                }
              }
            },
            {
              "id": "root_agent",
              "type": "register_agent",
              "previous_node_inputs": {
                "deploy_model_3": "llm.model_id",
                "ml_model_tool": "tools",
                "agent_tool": "tools"
              },
              "user_inputs": {
                "name": "DEMO-Test_Agent",
                "type": "flow",
                "parameters": {
                  "prompt": "Answer the question as best you can."
                },
                "llm.parameters": {
                  "max_iteration": "5",
                  "stop_when_no_tool_found": "true"
                },
                "tools_order": [
                  "agent_tool",
                  "ml_model_tool"
                ],
                "memory": {
                  "type": "conversation_index"
                },
                "app_type": "chatbot"
              }
            }
          ],
          "edges": [
            {
              "source": "create_connector_1",
              "dest": "register_model_2"
            },
            {
              "source": "register_model_2",
              "dest": "deploy_model_3"
            },
            {
              "source": "list_index_tool",
              "dest": "sub_agent"
            },
            {
              "source": "deploy_model_3",
              "dest": "sub_agent"
            },
            {
              "source": "sub_agent",
              "dest": "agent_tool"
            },
            {
              "source": "deploy_model_3",
              "dest": "ml_model_tool"
            },
            {
              "source": "deploy_model_3",
              "dest": "root_agent"
            },
            {
              "source": "ml_model_tool",
              "dest": "root_agent"
            },
            {
              "source": "agent_tool",
              "dest": "root_agent"
            }
          ]
        }
      }
    }
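One way to submit a template like this is sketched below in Python. Several details are assumptions that do not come from this page and should be checked against your OpenSearch version: the Flow Framework Create Workflow endpoint path /_plugins/_flow_framework/workflow, the provision=true query parameter, and an unsecured local cluster at http://localhost:9200. The build_create_request helper is hypothetical, and the sketch only builds the request without sending it.

```python
import json
import urllib.request


def build_create_request(
    template: dict,
    base_url: str = "http://localhost:9200",  # assumed local cluster address
    provision: bool = True,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates a workflow.

    The endpoint path and the provision query parameter are assumptions
    about the Flow Framework API, not details taken from this tutorial.
    """
    url = f"{base_url}/_plugins/_flow_framework/workflow"
    if provision:
        url += "?provision=true"
    return urllib.request.Request(
        url,
        data=json.dumps(template).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# A trimmed stand-in for the full template; load the real JSON file in practice.
template = {
    "name": "tool-register-agent",
    "use_case": "REGISTER_AGENT",
    "workflows": {"provision": {"nodes": [], "edges": []}},
}
request = build_create_request(template)
print(request.get_method(), request.full_url)
```

To send the request, you could pass it to urllib.request.urlopen, adding whatever authentication and TLS settings your cluster requires.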

Next steps

To learn more about agents and tools, see Agents and tools.